About me

I am a postdoctoral researcher (postdoc) at the Chair for the Methods of Machine Learning at the University of Tübingen, advised by Philipp Hennig. Before that, I did my Ph.D. in the same group as part of the IMPRS-IS (International Max Planck Research School for Intelligent Systems). I am working on more user-friendly training methods for machine learning. My work aims at ridding the field of deep learning of annoying hyperparameters and thus automating the training of deep neural networks.

Prior to joining the IMPRS-IS, I studied Simulation Technology (B.Sc. and M.Sc.) and Industrial and Applied Mathematics (M.Sc.) at the University of Stuttgart and the Technische Universiteit Eindhoven, respectively. My Master’s thesis was on constructing preconditioners for Toeplitz matrices. This project was done at ASML (Eindhoven), a company developing lithography systems for the semiconductor industry.

- Deep Learning

- Training Algorithms

- Stochastic Optimization

- Benchmarking

- Artificial Intelligence

- Postdoctoral Researcher, 2022 - present
  University of Tübingen

- Ph.D. in Computer Science, 2017 - 2022
  University of Tübingen & MPI-IS, IMPRS-IS fellow

- M.Sc. in Industrial and Applied Mathematics, 2015 - 2016
  TU/e Eindhoven

- M.Sc. in Simulation Technology, 2015 - 2016
  University of Stuttgart

- B.Sc. in Simulation Technology, 2011 - 2015
  University of Stuttgart

News

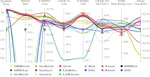

- June 2023: We published the first paper from the MLCommons' Algorithms working group on arXiv titled "Benchmarking Neural Network Training Algorithms". In it, we motivate, present, and justify our new AlgoPerf: Training Algorithms benchmark. We plan to issue a Call for Submissions for the benchmark soon.

- July 2022: I successfully defended my Ph.D. thesis with the title "Understanding Deep Learning Optimization via Benchmarking and Debugging"! I will continue to work as a postdoctoral researcher at the University of Tübingen.

- September 2021: Our paper “Cockpit: A Practical Debugging Tool for the Training of Deep Neural Networks” has been accepted at NeurIPS 2021. In this work, we present a new kind of debugger, specifically designed for training deep nets.

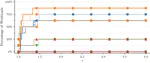

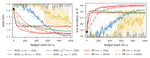

- May 2021: Our work “Descending through a Crowded Valley - Benchmarking Deep Learning Optimizers” has been accepted at ICML 2021. In it, we present an extensive comparison of fifteen popular deep learning optimizers.

- April 2021: I have been elected as a co-chair for the MLCommons working group on Algorithmic Efficiency together with George Dahl. The working group will develop a set of rigorous and relevant benchmarks to measure neural network training speedups due to algorithmic improvements, focusing on new training algorithms and models.

- September & October 2020: I have been distinguished as a top reviewer for ICML 2020 and received a Top 10% Reviewer award for NeurIPS 2020.

- May 2019: Our paper “DeepOBS: A Deep Learning Optimizer Benchmark Suite” has been accepted at ICLR 2019. In the paper, we present a benchmark suite for deep learning optimization methods. I will be at the conference from May 6 through May 9 in New Orleans, USA.

Education & Experience

Working on making deep learning more user-friendly by focusing on the training algorithms.

Advisor: Prof. Dr. Philipp Hennig

Doctoral student in computer science.

Working on improving deep learning optimization at the Max Planck Institute for Intelligent Systems and the University of Tübingen in the International Max Planck Research School for Intelligent Systems (IMPRS-IS).

Supervisor: Prof. Dr. Philipp Hennig

Focus on numerics and mathematical applications.

Master’s thesis at ASML with the title “Approximation of Inverses of BTTB Matrices for Preconditioning Applications”.

Supervisor: Michiel Hochstenbach, Ph.D., TU/e

Focus on a broad education in mathematics, engineering, computer & natural science.

Bachelor’s thesis in cooperation with the Fraunhofer Institute for Industrial Engineering with the title “Analysis, evaluation and optimization of an agent-based model simulating warning dissemination”.

Supervisor: Prof. Dr. Albrecht Schmidt

Contact

- f.schneider@uni-tuebingen.de

- +49 7071 29 70852

- Maria-von-Linden-Str. 6, Tübingen, 72076

- Enter the AI Research Building and take the stairs to the second floor. Take the right wing and turn right after the glass meeting room to office 20-5/A10.